Intel chief executive Pat Gelsinger said that the company’s upcoming “Meteor Lake” 14th-gen Core processors will usher in the era of the “AI PC,” a development he said rivalled the launch of the Centrino integrated platform that helped bring Wi-Fi into the notebook market.

Intel’s Meteor Lake is on track for a launch during the current third quarter of 2023, and will feature a dedicated AI engine known as Intel AI Boost. Gelsinger provided an updated roadmap during the company’s second quarter 2023 earnings call.

Intel’s Gelsinger has said previously that Meteor Lake would be synonymous with AI, though the company has shipped Movidius AI add-on cards alongside its 13th-gen Core chips. Those chips, which have appeared in laptops like the Samsung Galaxy Book3 Ultra, really haven’t been able to tap any “AI” functions besides Microsoft-driven enhancements to video calls placed with Windows, known as Windows Studio Effects. (AMD has a rival effort, known as Ryzen AI.) That, for now, has left any concept of an AI PC without much meaning, though Gelsinger clearly believes an AI renaissance is in the cards.

Thursday may have been the first time Gelsinger used the term “AI PC,” which he invoked to hearken back to the days of the Centrino platform. Intel bundled a mobile processor, chipset, and Wi-Fi component together within notebooks in the early 2000s, and branded the combination as “Centrino.” Gelsinger also ran Intel’s Enterprise Group in 2005 when Intel reorganized the company to adopt a similar strategy. Gelsinger told analysts that Intel “firmly believes in this idea of democratizing AI and opening the software stack,” creating an AI industry ecosystem.

“Importantly, we see the AI PC as a critical inflection point for the PC market over the coming years that will rival the importance of Centrino on Wi-Fi in the early 2000s,” Gelsinger said during the call. “And we believe that Intel is very well positioned to capitalize on the emerging growth opportunity.”

AI must run on the PC, Intel says

So far, however, AI has primarily lived in the cloud. AI-enabled chatbots like Bing Chat, Google’s Bard, and OpenAI’s ChatGPT all run on a server, and Qualcomm’s partnership with Meta to run Meta’s Llama 2 large language model on Snapdragon processors is one of the first client-side AI announcements. Gelsinger, however, hopes to change that. And the first thing the industry needs to do is establish an ecosystem of AI-infused client applications.

“The real question is what applications are going to become AI enabled,” Gelsinger said. “And today you’re starting to see that people are going to the cloud and you know, goofing around with ChatGPT writing a research paper. That’s like super cool, right?”

Mark Hachman / IDG

But Gelsinger added that “you can’t [make a] round trip to the cloud” for similar applications, such as generating additional content in games, productivity apps, and AI-infused creativity apps like Adobe Photoshop and its Generative Fill and Expand functions.

“That must be done on the client for that to occur, right?” Gelsinger added. “You can’t go to the cloud, you can’t round trip to the cloud,” because of the cost and latency those trips require.

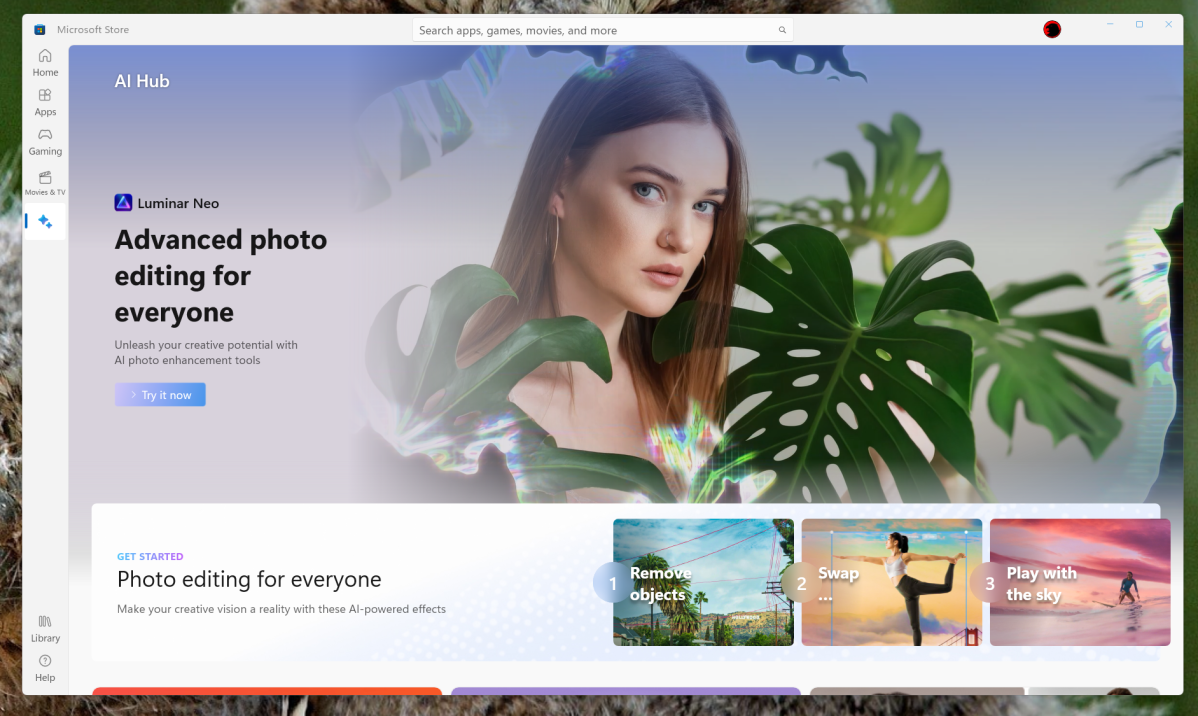

Microsoft has quietly tried to create an AI Hub within the Microsoft Store app, featuring apps like Luminar Neo and Lensa. But without dedicated processors to run them, the apps tend to run on a local CPU or GPU.

The answer, Gelsinger said, was to infuse local processors with AI instead. “We expect that Intel… is the one that’s going to truly democratize AI at the client and at the edge,” he said. “And we do believe that this will become a [market driver] because people will say oh, I want those new use cases. They make me more efficient and more capable, just like Centrino made me more efficient, because I didn’t have to plug into the wire.”

Otherwise, Gelsinger and Intel said that the company remains on track with its existing process roadmap: Intel 7 is ramping now, Intel 4 will begin with Meteor Lake in the second half of 2023, and Intel 3, 20A, and the Intel 18A process are still on Intel’s previous schedule.