A top AMD executive says that the company is thinking about deeper integration of AI across the Ryzen product line, but one ingredient is missing: client applications and operating systems that actually take advantage of it.

In January, AMD launched the Ryzen 7000 mobile series, which includes the Ryzen 7040HS. In early May, AMD announced the Ryzen 7040U, a lower-power version of the 7040HS. The two are the first chips to include Ryzen AI “XDNA” hardware, making them among the first PC processors with dedicated AI logic.

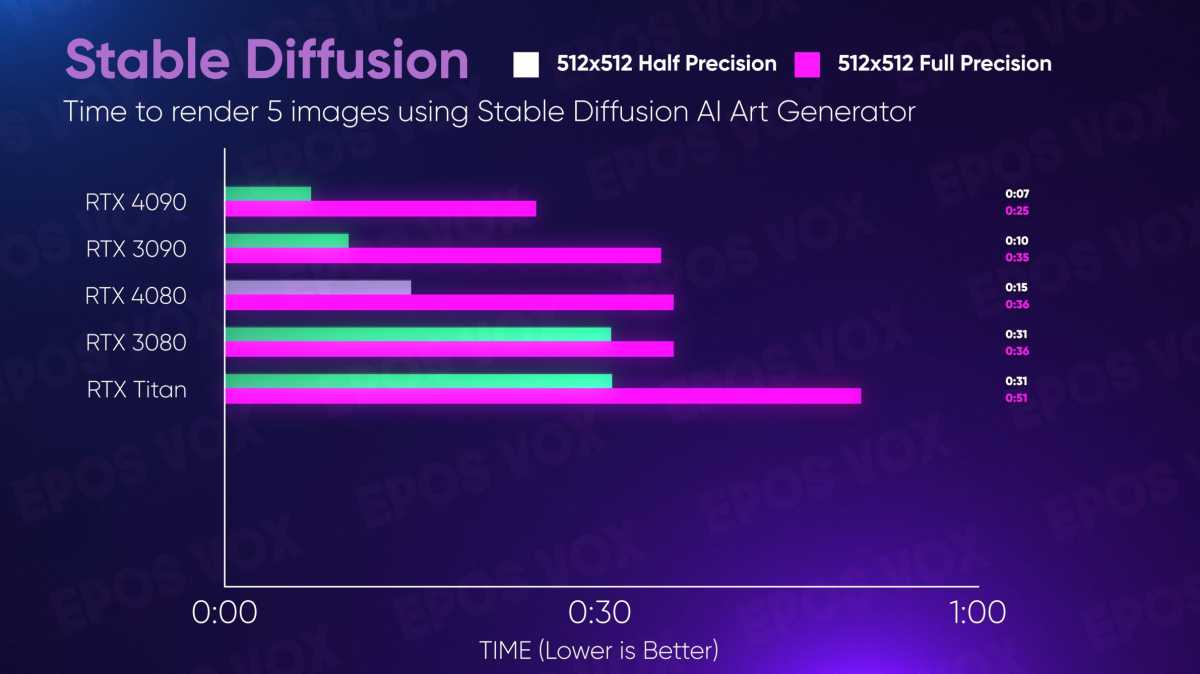

So far, however, AI remains a service that runs almost exclusively in the cloud, with a few exceptions such as Stable Diffusion. That makes AMD’s venture risky. Why dedicate costly silicon to Inference Processing Units (IPUs), advancing a function that no one can really use on the PC?

That’s one of the questions we put to David McAfee, corporate vice president and general manager of the client channel business at AMD. His message, essentially, is that you’ll have to trust AMD, and even reframe the way you think about AI.

“We’re on the cusp of a series of announcements and events that will help shed more light into what’s happening with AI processing in general,” McAfee said. “We really view those as the tip of the iceberg.”

It’s not clear if McAfee was referring to Google I/O, the Microsoft Build developer conference later this month, or something else entirely. But AMD seems to be planning to take a more bite-sized approach to AI than you might expect.

AMD: AI on the PC will be less complex than you think

Sure, you can run an AI model on top of a Ryzen CPU or a Radeon GPU, provided that you have enough storage and memory. “But those are pretty heavy hammers to use when it comes to doing that type of compute,” McAfee said.

Instead, AMD sees AI on the PC as small, lightweight tasks that trigger frequently and run on the IPU. Remember, AMD has used “AI” for some time to try to optimize its technology for your PC. For Ryzen processors, it groups several technologies under the “SenseMI” label, including Precision Boost 2 and mXFR, which adjust clock speeds, and Smart Prefetch. IPUs could take that to another level.

“I think one of the nuances that comes along with the way that we’re looking at IPUs, and IPUs for the future, is more along the lines of that combination of a very specialized engine that does a certain type of compute, but does it in a very power-efficient way,” McAfee said. “And in a way that’s really integrated with the memory subsystem and the rest of the processor, because our expectation is as time goes on, these workloads that run on the GPU will not be sort of one-time events, but there’ll be more of a… I’m not going to say a constantly running set of workstreams, but it will be a more frequent type of compute that happens on your platform.”

AMD sees the IPU as something like a video decoder. Video decoding can be brute-forced on a Ryzen CPU, but that requires an enormous amount of power to deliver a good experience. “Or you can have this relatively small engine that’s a part of the chip design that does that with incredible efficiency,” McAfee said.

That probably means, at least for now, that you won’t see Ryzen AI IPUs on discrete cards, or even with their own memory subsystem. Stable Diffusion’s generative AI model, by contrast, typically runs in a graphics card’s dedicated video RAM. But when asked about the concept of dedicated “AI RAM,” McAfee demurred. “That sounds really expensive,” he said.

AI’s future within Ryzen

XDNA is to Ryzen AI what RDNA is to Radeon: the first term names the architecture, the second the brand. AMD obtained its AI technology through its acquisition of Xilinx, but it still hasn’t detailed exactly what XDNA contains. McAfee acknowledged that AMD and its competitors have work to do in describing the capabilities of AI hardware such as Ryzen AI in terms that enthusiasts and consumers can understand, much as core counts and clock speeds help define CPUs.

“There is, let’s call it an architecture generation, that goes along with an IPU,” McAfee said. “And what we integrate this year versus what we integrate in a future product will likely have different architecture generations associated with them.”

The problem is that AI metrics, whether core counts, parallel streams, or neural layers, simply haven’t been defined for consumers. Beyond trillions of operations per second (TOPS) and TOPS per watt, there aren’t any generally accepted measures.

“I think that we probably haven’t gotten to the point where there’s a good set of industry standard benchmarks or industry standard metrics to help users better understand Widget A from AMD versus Widget B from Qualcomm,” McAfee said. “I’d agree with you that the language and the benchmarks are not easy for users to understand which one to pick right now and which one they should be betting on.”

With Ryzen AI available on just two families of Ryzen laptop processors, the natural question is how AMD will bring it to the rest of its CPU lineup. That, too, is under discussion. “I think we’re having the AI conversation all the way across the Ryzen product line,” McAfee said.

Because of the extra cost of manufacturing the Ryzen AI core, AMD is evaluating what value Ryzen AI adds, especially in its budget processors. McAfee said that the end-user benefit “has to be a lot more concrete” before AMD adds Ryzen AI to its low-end mobile Ryzen chips.

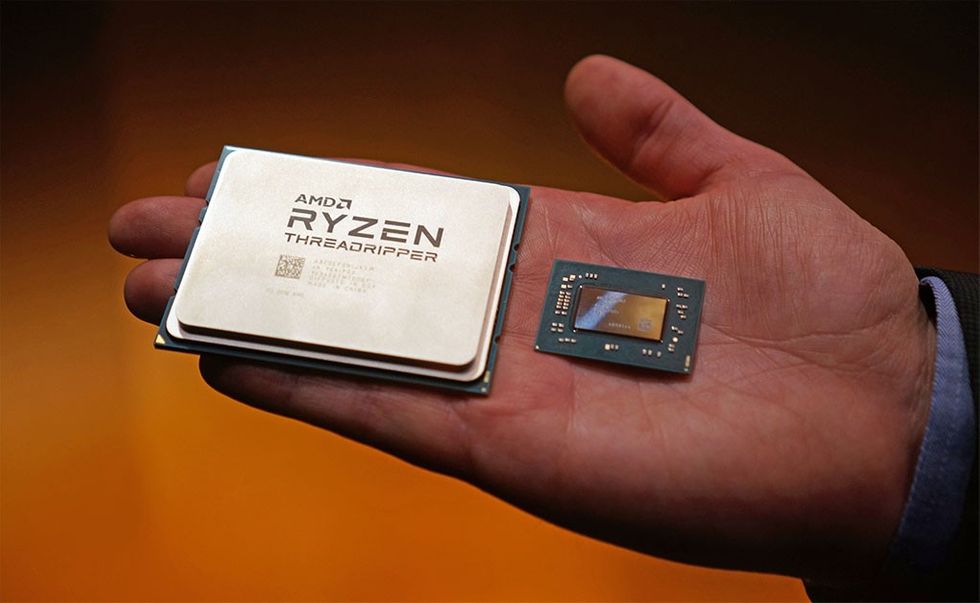

Will AMD add Ryzen AI to its desktop Ryzen chips? That, somewhat surprisingly, is less certain. McAfee framed the question in terms of power efficiency: because desktops have so much power at their disposal, “maybe Ryzen AI becomes more of a testing tool as opposed to something that is driving the everyday value of the device,” he said. It’s possible that a high-core-count Threadripper could be used to train AI models, though not necessarily to run them, he added.

AMD does believe, though, that AI will eventually take a seat at the table currently occupied by CPUs and GPUs.

“I really do believe there will be a point in time in the not-too-distant future where yes, as people think about the value of their system, it’s not just my CPU and my GPU, this becomes a third element of compute that does add significant value to their platform,” McAfee said.

AI’s next steps

Chip evolution has typically followed a fairly simple progression. Developers come up with a new app and program it to run on a general-purpose CPU. Over time, the industry settles on a specific task (video games, say) and specialized hardware follows. Inferencing chips in the datacenter have been developed for years, but app developers are still figuring out what AI can do, let alone what consumers can use it for.

Eventually, McAfee said, there will be two reasons for AI applications to run on your PC rather than in the cloud. The first is battery life. “There will be a point in time where those models reach a level of maturity or reach a practical application where it becomes the right step for the developer to quantize that model and to put it on, you know, local AI accelerators that live on a mobile PC platform for, you know, the battery life benefits,” he said.

The other reason? Security, McAfee added. As AI is integrated into business life, businesses and even consumers will likely want their own private AI services, so that their personal or business data doesn’t leak into the cloud. “I don’t want a public-facing instance scanning all of my email and documents, and potentially using that,” he said. “No.”

Software’s responsibility

McAfee declined to discuss what he knew of Microsoft’s roadmap, or the speculation that Windows 12 may be more closely integrated with AI. Consumer AI applications could include games, for example NPCs that hold intelligent conversations rather than reciting scripted dialogue.

But, McAfee added, AMD, Intel, Qualcomm, and the rest of the hardware industry can’t be solely responsible for the success or failure of AI.

“Ultimately for this to be successful… It really boils down to, you know, does the software live up?” McAfee said. “Does the software and the user experience live up to the hype? I think that’s going to be the key. Over the next three years, the software and user experiences have to deliver that value, and move this from a really exciting, you know, sort of emergent technology that’s just making its way into the conversation on PCs, into something that potentially is rather transformational to the way that we think about performance, and devices, and how we use them.”